RAG vs Fine-Tuning: The Big Choice for Smarter LLMs in 2025

Tired of switching between and experimenting with hundreds of LLMs? In 2025, optimizing Large Language Models is no longer a grind. Done well, it opens up smarter workflows for startups, clearer decisions, and digital experiences that feel like science fiction. But here's the catch: choosing between RAG and fine-tuning is not for the faint-hearted.

Conventionally, fine-tuning was the default approach for many teams. You sprinkled in your data, retrained the model, and kept your fingers crossed for the desired results. Does that work? Absolutely, but it drains your time, money, and energy.

That's exactly where RAG (Retrieval-Augmented Generation) makes its heroic entrance. It gives your model a makeover by letting it access live knowledge instead of going through repeated training. But the big question is: should you stick with fine-tuning, or move on to RAG?

According to one recent comparison of Retrieval-Augmented Generation (RAG) and parameter-efficient fine-tuning (PEFT), RAG improves over a non-personalized LLM by about 14.92%.

This blog breaks down why RAG implementation services have become the need of the hour and how fine-tuning and RAG really stack up. In the end, the answer might just change the way you build with AI.

How Does RAG Retrieve Information?

You might be aware of the two most popular rival approaches in the LLM world: retrieval-augmented generation and fine-tuning. Both aim to improve model performance, but they take very different routes. Think of RAG as giving your model an open-book exam, while fine-tuning makes it memorize the entire syllabus. Here's how they stack up when comparing RAG vs fine-tuning on scalability and accuracy:

1. Real-Time Flexibility

RAG gives your model the flexibility to incorporate real-time knowledge. It lets the model retrieve data from external sources when needed, which keeps your answers grounded, current, and full of context.

2. Fine-Tuning

Fine-tuning gives your model permanent memory. You retrain the model on your specific data so that it permanently learns your tone, knowledge base, and style.

3. Data Retrieval

RAG first retrieves the relevant information from your documents and then generates a response based on it. It's like being allowed to peek at your notes during an exam and passing with flying colors.

4. Permanent Accuracy

When it comes to accuracy, RAG wins in constantly changing domains because it pulls its answers from up-to-date sources. Fine-tuning, on the other hand, is a master of its own field: it provides precise answers for specialized inputs that resemble its training data.

5. Scalability Considerations

When it comes to scalability, RAG is built to scale: you expand the knowledge base as more external data becomes available. Fine-tuning is harder to scale, because adding new data means retraining, which requires heavy infrastructure and drives up costs.
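To make the "retrieve first, then generate" idea from the Data Retrieval point more concrete, here is a minimal sketch in Python. It assumes a tiny in-memory list of made-up documents and a simple word-count similarity score; real RAG systems typically use embedding models and a vector store instead. The names `DOCUMENTS`, `retrieve`, and `build_prompt` are hypothetical and exist only for this illustration.

```python
import math
import re
from collections import Counter

# Hypothetical mini knowledge base; in practice this would be your own documents.
DOCUMENTS = [
    "Refunds are processed within 5 business days of approval.",
    "Our support team is available Monday to Friday, 9am to 6pm.",
    "Premium plans include priority support and a dedicated manager.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(counts_a, counts_b):
    """Cosine similarity between two word-count vectors."""
    shared = set(counts_a) & set(counts_b)
    dot = sum(counts_a[w] * counts_b[w] for w in shared)
    norm_a = math.sqrt(sum(v * v for v in counts_a.values()))
    norm_b = math.sqrt(sum(v * v for v in counts_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=1):
    """Return up to top_k documents that share at least one word with the query."""
    query_counts = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_counts, Counter(tokenize(doc))), doc)
        for doc in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, documents, top_k=1):
    """Assemble the prompt that would be sent to the LLM (the model call is omitted)."""
    context = "\n".join(retrieve(query, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("How long do refunds take?", DOCUMENTS))
```

Notice that updating what the model "knows" here only means editing `DOCUMENTS`; no retraining is involved, which is the flexibility described above.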

Here is a quick comparison of the two approaches:

| Criteria | RAG | Fine-Tuning |
|---|---|---|
| Cost | Cheaper; no need to retrain the model, just manage external data. | Expensive; requires retraining and computing power. |
| Performance | Great for dynamic, knowledge-heavy tasks. | Strong for domain-specific, consistent behavior. |
| Scalability | Easy to scale, as you just expand the knowledge base. | Harder to scale, as retraining gets heavier as data grows. |
| Accuracy | High accuracy if the external data is reliable and updated. | High accuracy in specialized tasks once trained. |
| Use Cases | Customer support, research, Q&A, and fast-changing data tasks. | Healthcare, finance, legal, or any domain that requires deep specialization. |

RAG vs Fine-Tuning: Which One Fits Your Needs?

You might be struggling to choose between RAG and fine-tuning. That's a headache in itself: how do you decide between flexible adaptation and super specialization? Both have their own strengths and weaknesses; you just need to pick the one that aligns with your business needs. Here are the RAG vs fine-tuning pros and cons:

1. Flexibility

Because RAG relies on knowledge from external datasets, its responses stay current. It also saves you money, since you don't have to retrain the model for every new piece of information.

2. Potential Weakness

RAG is only as reliable as the external sources it draws on. If a source is inaccurate or out of date, the answers will be inconsistent too.

3. Core Strength

You train the model once, and after that it picks up your tone and terminology like an expert. Fine-tuning is a go-to for industries where accuracy and consistency play a major role.

4. Weak Point

You might end up spending half of your life savings on retraining the model with new data. And even after all that effort, a fine-tuned model still won't adapt quickly.

How Do RAG and Fine-Tuning Help Your Business?

If your business relies on a dynamic world of information, RAG should be your smart choice. It performs like an expert in customer support, dynamic data, and knowledge-heavy apps. Think of it as the friend who is always up to date on every topic.

Fine-tuning, meanwhile, delivers its best when your model needs to excel in a specific domain with precision. You train it once, and then it's there for you with fast results, accuracy, and consistency. That makes it a perfect match for industries such as law, healthcare, and finance. That clears the air on when to use RAG vs fine-tuning.

Lastly, enterprises usually have to weigh adaptability against precision. RAG offers scalability and agility for evolving data, while fine-tuning provides stability and specialization for niche tasks. So, as a rule of thumb, go with RAG for freshness and fine-tuning for expertise.

RAG vs Fine-Tuning: Which One Saves More on Cost?

When it comes to cost and performance, RAG and fine-tuning play on very different teams. RAG saves you money by skipping retraining and investing in retrieval infrastructure instead. Fine-tuning, on the other hand, demands heavy compute upfront but rewards you afterwards with lightning-fast, specialized performance for long-term ROI. Their role in conversational AI development services is also clearly split depending on your business needs.

1. Cost Analysis

RAG is a loyal friend to your wallet, while fine-tuning can inflate your bills with training costs, although it also has lower inference costs in the long run. So in this RAG vs fine-tuning cost comparison, your actual needs decide the case.

2. Performance Differences

RAG outshines fine-tuning at delivering reliable answers quickly across domains; fine-tuning, however, responds in no time once you have trained it.

3. Practical Applications

RAG powers large enterprise chatbots, research agents, and healthcare Q&A. Fine-tuning, however, has a major presence in the legal, medical diagnosis, and finance sectors. These RAG vs fine-tuning use cases highlight the importance of this choice.

At the end of the day, it's not about which approach is "better", but which one actually meets your needs. If you want fast and updated responses, RAG is a clear winner. But if you want stable accuracy and consistency in a niche, fine-tuning steals the game.
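The cost trade-off above (RAG is cheaper to set up, fine-tuning is cheaper per answer in the long run) can be sketched as a simple break-even calculation. The snippet below assumes a linear cost model, and every number in it is a made-up placeholder rather than real pricing. The function names `total_cost` and `break_even_volume` are hypothetical helpers for this illustration.

```python
def total_cost(upfront, cost_per_1k_queries, thousands_of_queries):
    """Linear cost model: one-time setup cost plus usage cost."""
    return upfront + cost_per_1k_queries * thousands_of_queries


def break_even_volume(rag_upfront, rag_per_1k, ft_upfront, ft_per_1k):
    """Query volume (in thousands) at which both approaches cost the same.

    Assumes fine-tuning costs more upfront and less per query than RAG.
    Returns None when that assumption does not hold.
    """
    upfront_gap = ft_upfront - rag_upfront
    per_1k_gap = rag_per_1k - ft_per_1k
    if upfront_gap <= 0 or per_1k_gap <= 0:
        return None
    return upfront_gap / per_1k_gap


if __name__ == "__main__":
    # All figures are hypothetical placeholders, not real prices.
    volume = break_even_volume(
        rag_upfront=1000, rag_per_1k=4.0,
        ft_upfront=5000, ft_per_1k=2.0,
    )
    print(f"Break-even at about {volume:.0f} thousand queries")
```

Below the break-even volume, RAG is the cheaper option under these assumptions; above it, fine-tuning's lower per-query cost starts to pay off. Plug in your own estimates before drawing conclusions.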

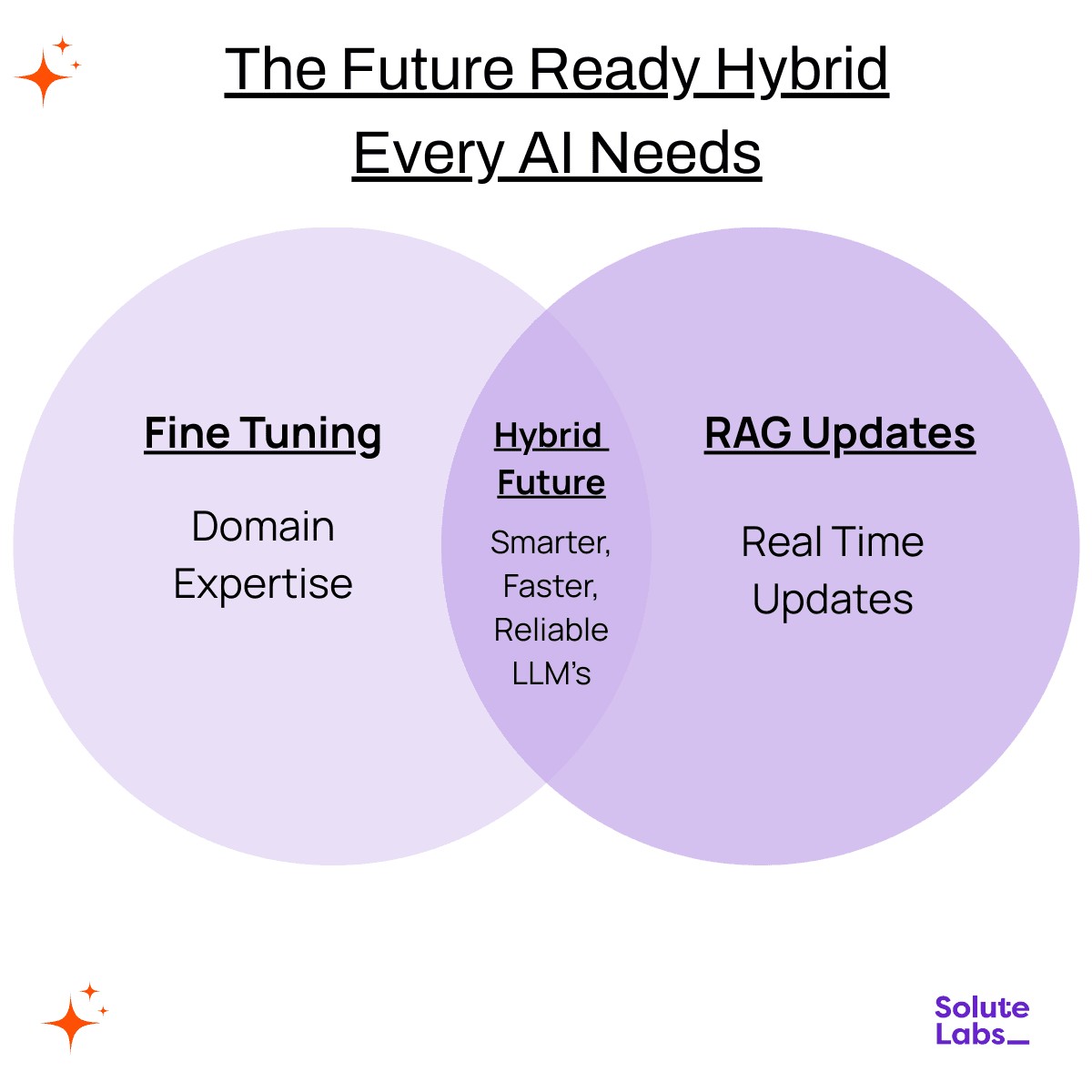

The Future Ready Hybrid Every AI Needs

Just give it a thought: fine-tuning gives your LLM a strong foundation in domain-specific knowledge, while RAG keeps it fresh with constant live information. Instead of treating them as rivals, merging the two could create a model that's smarter, faster, and more reliable than ever.

Imagine a medical chatbot that’s fine-tuned on complex terminology but also taps into the latest research papers through RAG. Or an enterprise assistant trained on company data but updated daily with external market insights. That’s like having both a memory palace and a live news feed in one brain.
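A hybrid setup like this can be sketched as "retrieve fresh context, then hand it to the specialized model". The snippet below is only an illustration under stated assumptions: `keyword_retrieve` is a toy word-overlap retriever, `placeholder_fine_tuned_model` stands in for whatever fine-tuned model you would actually call, and the documents are invented. None of these names come from a real library.

```python
import re
from typing import Callable, List


def words(text: str) -> set:
    """Lowercase alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def keyword_retrieve(query: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Toy retriever: rank documents by how many words they share with the query."""
    query_words = words(query)
    scored = [(len(query_words & words(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def hybrid_answer(
    query: str,
    documents: List[str],
    generate: Callable[[str], str],
    top_k: int = 2,
) -> str:
    """Combine live retrieval (RAG) with a specialized model (fine-tuning)."""
    context = keyword_retrieve(query, documents, top_k)
    prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {query}"
    # `generate` is where a fine-tuned model would be called.
    return generate(prompt)


def placeholder_fine_tuned_model(prompt: str) -> str:
    """Hypothetical stand-in for a fine-tuned model; it only reports the prompt size."""
    return f"[fine-tuned model received {len(prompt.splitlines())} prompt lines]"


if __name__ == "__main__":
    # Invented documents standing in for a daily-updated external feed.
    feed = [
        "Market update: cloud spending rose this quarter.",
        "Office policy: badges are required at the entrance.",
    ]
    print(hybrid_answer("What happened to cloud spending?", feed, placeholder_fine_tuned_model))
```

Passing the model in as a plain function keeps the retrieval side independent of the model side, so either half can be swapped without touching the other.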

The future might not be about RAG versus fine-tuning at all, but about using both in AI agent development solutions for balance. By now, these RAG vs fine-tuning examples should have made the picture clear. Static knowledge plus dynamic retrieval means your LLM never feels outdated, and that's the real win for businesses and users alike.

Conclusion

RAG makes your LLM street-smart, while fine-tuning turns it book-smart. So go ahead and choose your vibe, be it flexible updates or rock-solid expertise. Honestly, the real magic happens when you blend both approaches and let your model flex like an AI superhero.

At SoluteLabs, we don't just talk about RAG vs fine-tuning; we help you make the right choice for your business. We build artificial intelligence solutions that are flexible and precise, and that keep your LLMs smarter, faster, and future-ready.

Why settle for ordinary when you can partner with us and unlock the potential AI holds? Book a consultation with us and turn AI potential into results.