Most AI projects never make it past the prototype stage. Not because the models are weak or the engineers are inexperienced, but because teams try to approach AI the same way they have always built software. Delivering real AI-driven products requires new structures, workflows, and ways of measuring success.

The momentum is clear. In 2025, 78% of companies worldwide reported using AI in at least one part of their business, a sign that organizations are moving beyond isolated pilots toward broader AI integration.

In this blog, we map out a practical roadmap for executives, covering what it means to be AI-native, how to build an AI product engineering team, which skills to hire for, and which common pitfalls to avoid. It’s a hands-on guide to turning AI plans into a production-ready reality.

What AI-Native Actually Means in Production

In production, AI-native doesn’t begin with choosing a model or setting up a tool. It begins with a simple question: what part of this product feels heavier than it should? That’s usually where teams start. It might be manual reviews, slow iterations, support bottlenecks, or decision-making that relies too much on gut feel. AI fits best where friction already exists, not where teams are trying to prove they’re “doing AI.”

In the first month, the team is rarely impressive on paper. It’s usually a small group figuring things out as they go: a product lead who understands the problem, a couple of curious and adaptable engineers, and access to AI expertise somewhere in the mix. By month six, things look different. Roles become clearer. Someone owns how AI behaves in production. Someone else worries about quality and edge cases. The team hasn’t just grown; it has learned what actually matters.

Workflows also change faster than people expect. From day one, planning conversations feel different. Instead of asking “can we build this?”, teams ask “how confident are we in this output?” Reviews are no longer only about clean code; they’re about whether the system behaves sensibly when real users show up. Teams that succeed accept early on that AI work is messy, iterative, and never truly finished.

Where teams go wrong is usually not technical. They either try to build everything themselves before they’re ready, or they hand too much over to tools or partners without real ownership. The teams that find their footing make intentional choices early about what to build, what to lean on partners for, and what knowledge must stay in-house. Production-ready AI isn’t about being perfect. It’s about being prepared to learn fast once real users are involved.

Deciding Where AI Belongs in Your Product

A good way to think about this is to start with the parts of your product that already feel hard to manage. Places where teams spend too much time reviewing, sorting, explaining, or making the same decision again and again. That’s usually where AI helps. It can take the first pass, reduce manual effort, or give users a quicker answer without needing everything to be perfect.

Where AI usually doesn’t belong is anywhere that requires strict rules or zero tolerance for error. Payments, permissions, and pricing logic are better left to traditional systems. Often, AI works best when it supports a workflow instead of running it end-to-end. If you’re unsure how to make that call, this breakdown of AI-native vs. AI-enhanced approaches explains the difference clearly and can help guide those early decisions.

Your Build Strategy: In-House, Partner, or Hybrid

There’s no one-size-fits-all answer to building AI capabilities. The right choice depends on how fast you need to ship, how much AI experience your team has, and how much risk you can afford early on. Here is a comparison to help you decide whether building AI capabilities in-house makes sense for your product or whether partnering externally would lead to faster, safer progress.


Build vs. Partner: Choosing the Right Build Model for Your AI Team

| Aspect | In-House Build | Partner or Hybrid |
|---|---|---|
| Time to First Production Release | Slower due to setup and experimentation | Faster, using proven delivery patterns |
| Team Ramp-Up and Learning Curve | Steep learning curve for AI in production | Shorter, thanks to experienced guidance |
| Iteration Speed After Launch | Improves gradually over time | Faster post-launch iteration early on |
| Upfront Cost (0–6 months) | High investment in hiring and setup | More predictable short-term costs |
| Sustained Cost (12+ months) | Lower once internal capability matures | Higher if dependency continues |
| Primary Technical Risk | Architectural mistakes and poor model evaluation | Dependency risk if ownership is unclear |
| Quality Control Maturity | Must be built from scratch | Often comes with existing standards and checks |
| Hiring Pressure | High pressure to find rare AI talent | Reduced immediate hiring needs |
| Internal IP Control | Full control from day one | Shared early; can transition in-house |
| Scalability Beyond the MVP | Slower without prior AI systems experience | Easier with established patterns |

The Minimum AI-Native Team That Actually Ships

You don’t need a big team to bring AI into production; you need the right mix of ownership, technical judgment, and accountability. Small teams tend to move faster and deliver results sooner than large teams, which are harder to coordinate.

- Product Leadership: Translates business priorities into clear team objectives and ensures that AI efforts lead to real outcomes such as increased revenue, efficiency gains, or a better user experience.

- AI/ML Engineering: Develops, integrates, and manages models and pipelines, coordinating with data and software teams to keep performance reliable in production.

- Software Engineering: Integrates AI outputs into the product, keeps it scalable and compliant with regulations, maintains system integrity, and uses AI tools to speed up development.

- Data Science & Analytics: Owns data quality, evaluates models, tracks performance, and ensures that AI systems keep improving based on user behavior.

- Design & User Experience: Turns AI capabilities into understandable user interactions, balancing automation with human control so users get the most value without friction.

- Platform & DevOps: Manages infrastructure, pipelines, observability, and scaling to keep models running efficiently and reliably.

How AI-Native Teams Actually Work Day-to-Day

Here is a closer look at what an AI-native team actually does from day one through the first six months. Understanding this progression helps leaders see how small teams evolve into production-ready units and avoid common early pitfalls.

Month 1–2: Learning by Doing

- Team size: 3–5 people, roles intentionally blurred

- Engineers alternate between product code and manual AI output review

- Product reviews every AI response before release

- Decisions driven by the first 50–100 real user interactions

- Prompts and logic iterate 10–20× per week

- No formal definition of “good enough.”

- Failures detected manually by the team

- Primary focus: understanding AI behavior in real workflows

Month 3–5: Transition to Production Readiness

- Patterns emerge from real usage data

- Evaluation frameworks and quality criteria are formalized

- Ownership becomes explicit (AI behavior, quality, edge cases)

- Targeted hires made if needed (ML engineer, AI product owner)

- Manual reviews begin to reduce, but remain in critical paths

- Teams that don’t stabilize here tend to stall long-term

Month 6+: Confident Execution

- Team size remains stable; clarity increases

- Explicit owner for AI behavior in production

- Defined error thresholds and confidence scores

- Prompts versioned; changes limited to 2–3× per month

- Automated evals run hundreds of scenarios on every change

- Monitoring detects drift and failures before user impact

- AI treated as infrastructure, not experimentation
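To make the Month 6+ practices more concrete, here is a minimal Python sketch of a release gate: a versioned prompt is run against a suite of scenarios, and the change ships only if the pass rate clears an agreed threshold. `PromptVersion`, `Scenario`, `run_evals`, `release_gate`, `fake_model`, and the 0.95 threshold are hypothetical names and values chosen for illustration. A real suite would call your model provider and hold hundreds of scenarios rather than two.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class PromptVersion:
    """A prompt template pinned to a version label (bump it on every change)."""
    version: str
    template: str  # assumed to contain an {input} placeholder


@dataclass(frozen=True)
class Scenario:
    """One eval case: a user input plus a check the model output must pass."""
    name: str
    user_input: str
    check: Callable[[str], bool]


def run_evals(prompt: PromptVersion, model: Callable[[str], str],
              scenarios: list[Scenario]) -> float:
    """Run every scenario against the prompt and return the pass rate (0.0 to 1.0)."""
    passed = sum(
        1 for s in scenarios
        if s.check(model(prompt.template.format(input=s.user_input)))
    )
    return passed / len(scenarios)


def release_gate(prompt: PromptVersion, model: Callable[[str], str],
                 scenarios: list[Scenario], min_pass_rate: float = 0.95) -> bool:
    """Allow a prompt change to ship only if it meets the agreed threshold."""
    return run_evals(prompt, model, scenarios) >= min_pass_rate


# Hypothetical stand-in for a real model call.
def fake_model(text: str) -> str:
    return text.upper()


scenarios = [
    Scenario("greeting", "hello", lambda out: "HELLO" in out),
    Scenario("refund", "refund please", lambda out: "REFUND" in out),
]
v2 = PromptVersion("v2", "Answer the customer: {input}")
print(release_gate(v2, fake_model, scenarios))  # True
```

Because the gate is a plain function, it can run as a CI step on every prompt or logic change.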

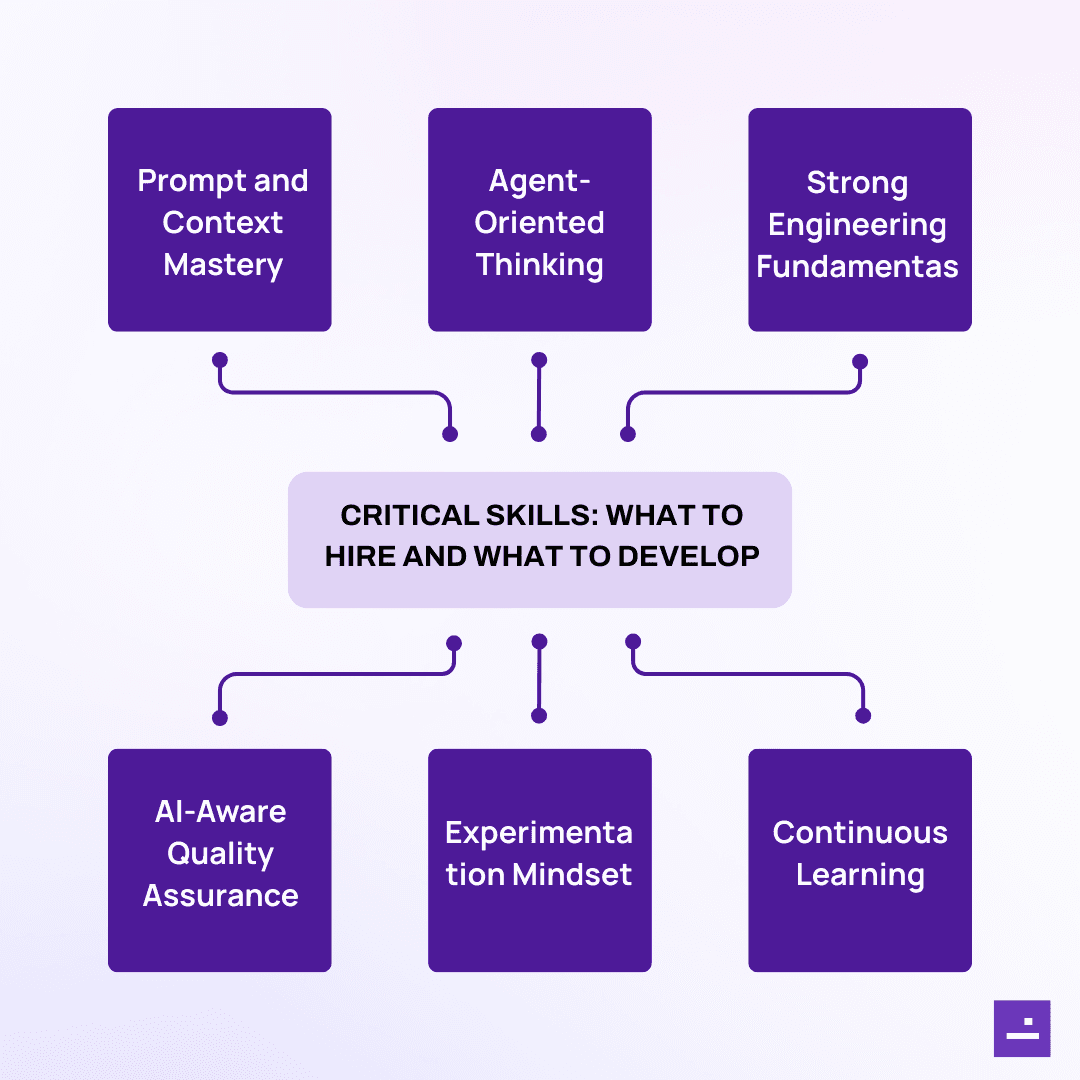

Critical Skills: What to Hire and What to Develop

When your organization adopts a modern AI-native workflow, success depends as much on how people think and work as on what tools they use. The following skills reflect what truly matters when building high-impact AI-enabled development teams.

1. Prompt and Context Mastery

Teams must be able to write well-defined prompts and supply well-organized context so that AI agents produce precise, consistent results. This is a core skill for any AI development team.
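One way to practice this is to treat a prompt as structured data rather than a free-form string. The sketch assumes a hypothetical `PromptSpec` class (not from any particular library) that keeps role, task, context, constraints, and output format in separate, labeled sections and caps how much context gets included.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical prompt structure: every part is explicit and labeled."""
    role: str
    task: str
    context: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)
    output_format: str = "Plain text."

    def render(self, max_context_chars: int = 2000) -> str:
        """Assemble labeled sections; stop adding context once the budget is hit."""
        numbered, used = [], 0
        for i, doc in enumerate(self.context, start=1):
            if used + len(doc) > max_context_chars:
                break  # drop whole documents rather than cutting one mid-sentence
            numbered.append(f"[{i}] {doc}")
            used += len(doc)
        rules = "\n".join(f"- {c}" for c in self.constraints) or "(none)"
        return "\n\n".join([
            f"## Role\n{self.role}",
            f"## Task\n{self.task}",
            "## Context\n" + ("\n".join(numbered) or "(none)"),
            f"## Constraints\n{rules}",
            f"## Output format\n{self.output_format}",
        ])


spec = PromptSpec(
    role="You are a customer support assistant.",
    task="Answer the customer's question using only the context.",
    context=["Orders ship within 2 business days.", "Refunds take 5-7 days."],
    constraints=["Cite context items by number.", "Say you don't know if unsure."],
    output_format="At most two sentences.",
)
print(spec.render())
```

Numbering the context items lets reviewers check which source an answer relied on, and the character budget keeps prompts from silently growing as more context is added.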

2. Agent-Oriented Thinking

Beyond writing code, developers need to know how to orchestrate AI agents, tool use, and human oversight within a single workflow.

3. Strong Engineering Fundamentals

Even with heavy automation, engineers still need to verify AI-generated work, refine the architecture, maintain quality, and protect the system’s long-term integrity.

4. AI-Aware Quality Assurance

QA must evolve into a function that covers AI-assisted testing, scenario creation, edge-case discovery, and continuous monitoring of model behavior.

5. Experimentation Mindset

Teams should work in quick cycles: testing small ideas, getting feedback right away, and continuously refining features as models evolve.

6. Effective Partner Collaboration

Companies should be able to work effectively with an external AI development partner when they need to scale, speed up delivery, or access advanced expertise.

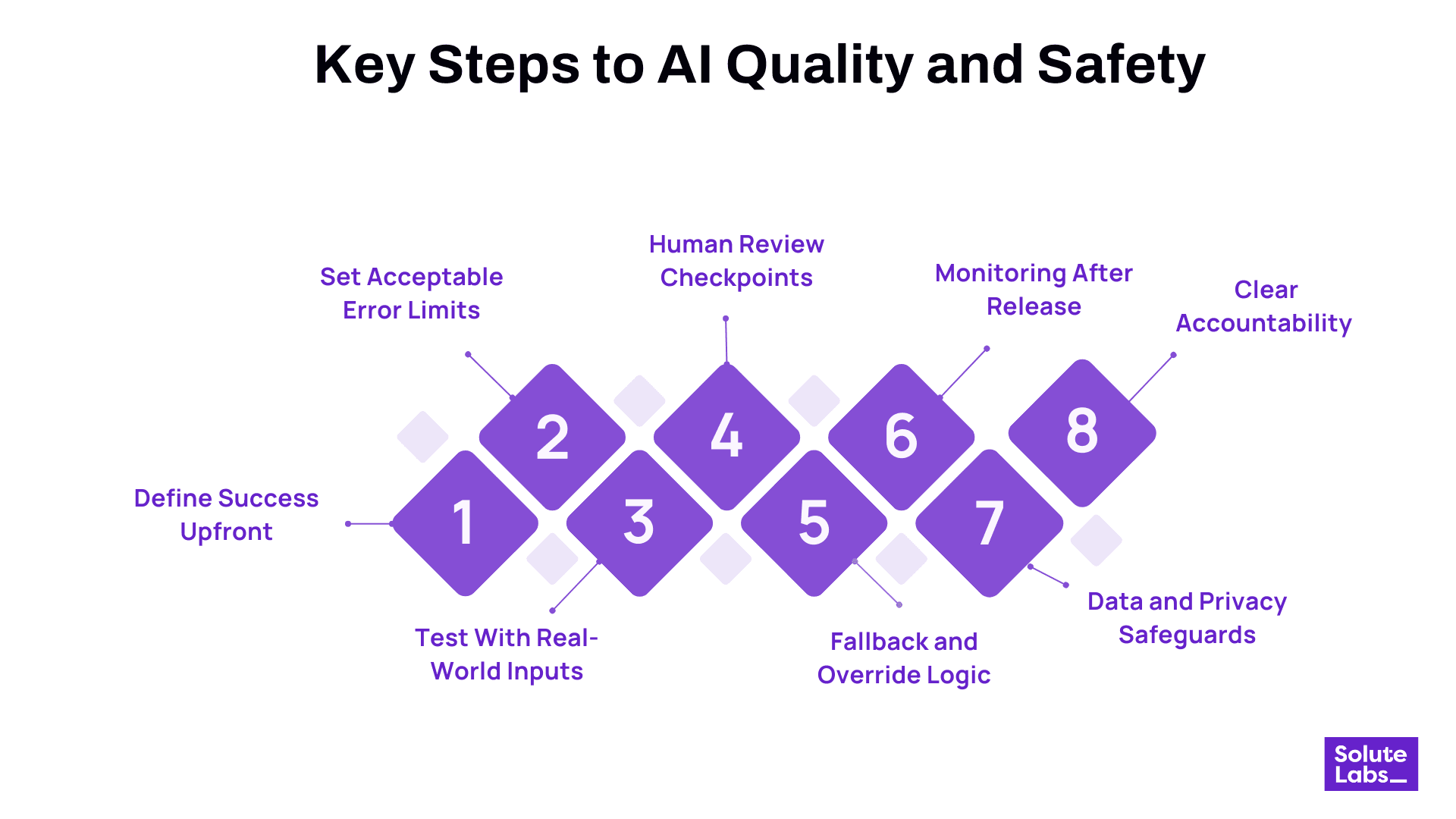

Evaluation, Quality, and Guardrails to Set Before Production

1. Define Success Upfront

Decide what success means before you release anything. That might be a target accuracy range, response relevance, task completion rate, or a reduction in manual effort. If success cannot be quantified, it cannot be evaluated.
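As a minimal sketch of defining success upfront, the snippet writes the targets down as data and checks logged interactions against them. The `Interaction` fields, metric names, and target values are assumptions for illustration, not recommended numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interaction:
    """One logged AI interaction; the fields are illustrative assumptions."""
    correct: bool          # a reviewer judged the output correct
    task_completed: bool   # the user finished what they came to do
    minutes_saved: float   # manual effort avoided versus the old process


# Hypothetical targets agreed on before launch.
SUCCESS_CRITERIA = {"accuracy": 0.90, "completion_rate": 0.80, "avg_minutes_saved": 2.0}


def compute_metrics(log: list[Interaction]) -> dict[str, float]:
    """Turn raw interaction logs into the metrics named in the criteria."""
    n = len(log)
    return {
        "accuracy": sum(i.correct for i in log) / n,
        "completion_rate": sum(i.task_completed for i in log) / n,
        "avg_minutes_saved": sum(i.minutes_saved for i in log) / n,
    }


def meets_criteria(metrics: dict[str, float],
                   criteria: dict[str, float] = SUCCESS_CRITERIA) -> dict[str, bool]:
    """Compare each metric with its target (higher is better for all three)."""
    return {name: metrics[name] >= target for name, target in criteria.items()}


log = [
    Interaction(True, True, 3.0),
    Interaction(True, False, 1.0),
    Interaction(False, True, 2.0),
    Interaction(True, True, 4.0),
]
metrics = compute_metrics(log)
print(metrics)                  # {'accuracy': 0.75, 'completion_rate': 0.75, 'avg_minutes_saved': 2.5}
print(meets_criteria(metrics))  # {'accuracy': False, 'completion_rate': False, 'avg_minutes_saved': True}
```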

2. Set Acceptable Error Limits

AI will not be right all the time. Teams should decide up front where errors are acceptable and where they are not. This keeps minor issues from being blown out of proportion while ensuring that serious problems are caught early.
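A simple way to encode acceptable error limits is a per-workflow error budget. In this hypothetical sketch the workflow names, budgets, and the "twice the budget" escalation rule are invented; the point is that a small miss and a serious miss trigger different responses.

```python
# Hypothetical error budgets: the share of wrong outputs each workflow can
# tolerate before the team treats it as a real problem.
ERROR_BUDGETS = {
    "ticket_tagging": 0.10,      # a wrong tag is cheap to correct
    "reply_drafting": 0.05,      # a human edits the draft before it is sent
    "contract_summaries": 0.02,  # errors here are costly, so the bar is high
}


def assess(workflow: str, errors: int, total: int) -> str:
    """Classify an observed error rate against the workflow's budget."""
    budget = ERROR_BUDGETS[workflow]
    rate = errors / total
    if rate <= budget:
        return "ok"          # within tolerance: no action needed
    if rate <= 2 * budget:
        return "watch"       # over budget: investigate, but don't panic
    return "escalate"        # well over budget: treat as a serious issue


print(assess("ticket_tagging", 8, 100))   # ok
print(assess("reply_drafting", 7, 100))   # watch
print(assess("reply_drafting", 12, 100))  # escalate
```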

3. Test With Real-World Inputs

Internal test data alone is not enough. Test with real user queries, messy data, and edge cases to understand how the system behaves under actual conditions.
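The sketch assumes you can sample real user queries from your logs. It mixes them with noisier variants and a few known edge cases, runs the system on each one, and records crashes or unexpected outputs. `classify_intent` is a hypothetical stand-in for your AI feature, and the noise rules are illustrative only.

```python
import random

# Edge cases that internal test data tends to miss (illustrative).
EDGE_CASES = ["", "   ", "?", "a" * 5000, "REFUND!!!", "¿reembolso?"]


def add_noise(query: str, rng: random.Random) -> str:
    """Return a messier variant of a query, the way real users type."""
    return rng.choice([
        query.upper(),
        f"  {query}   ",
        query.replace(" ", "  "),
        query[:-1] or query,  # dropped final character
    ])


def build_test_set(real_queries: list[str], seed: int = 0, copies: int = 2) -> list[str]:
    """Sampled real queries + noisy variants + known edge cases."""
    rng = random.Random(seed)  # fixed seed keeps the suite reproducible
    noisy = [add_noise(q, rng) for q in real_queries for _ in range(copies)]
    return list(real_queries) + noisy + EDGE_CASES


def find_failures(system, cases: list[str], allowed: set[str]) -> list[tuple[str, str]]:
    """Run every case; record crashes and outputs outside the allowed set."""
    failures = []
    for case in cases:
        try:
            output = system(case)
        except Exception as exc:
            failures.append((case, repr(exc)))
            continue
        if output not in allowed:
            failures.append((case, output))
    return failures


def classify_intent(query: str) -> str:
    """Hypothetical system under test: a trivial keyword router."""
    q = query.strip().lower()
    if not q:
        return "empty"
    return "refund" if ("refund" in q or "reembolso" in q) else "other"


cases = build_test_set(["I want a refund", "where is my order"])
print(len(cases))  # 12
print(find_failures(classify_intent, cases, {"refund", "other", "empty"}))  # []
```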

4. Human Review Checkpoints

Even when a system is fully automated, human validation should remain at critical stages, especially in early releases and high-impact workflows.
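Review checkpoints can be written down as an explicit policy rather than left to habit. This hypothetical `ReviewPolicy` always routes high-impact and early-release outputs to a person and spot-checks a random sample of everything else; the fields and the 5% sample rate are assumptions.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewPolicy:
    """Hypothetical per-workflow review rules."""
    high_impact: bool    # e.g. outputs sent to customers or that change records
    early_release: bool  # still in the first weeks of rollout
    sample_rate: float   # share of routine outputs a human spot-checks


def needs_human_review(policy: ReviewPolicy, rng: random.Random) -> bool:
    """Always review high-impact or early-release outputs; sample the rest."""
    if policy.high_impact or policy.early_release:
        return True
    return rng.random() < policy.sample_rate


rng = random.Random(42)
routine = ReviewPolicy(high_impact=False, early_release=False, sample_rate=0.05)
reviewed = sum(needs_human_review(routine, rng) for _ in range(1000))
print(reviewed)  # varies with the seed; the expected count is 50
```

As a workflow proves itself, the team can flip `early_release` off and lower the sample rate instead of removing review entirely.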

5. Fallback and Override Logic

Every AI capability needs a well-defined fallback for when confidence is low. An AI failure should never leave a user blocked.
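Here is a minimal fallback wrapper, assuming the AI call returns a confidence score between 0 and 1 (many models do not expose a calibrated score, so treat this as an assumption). If the call fails or the score falls below a threshold, the user gets a deterministic fallback instead of an error. The 0.7 threshold and all stand-in functions are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AIResult:
    answer: str
    confidence: float  # assumed to be a calibrated score in [0, 1]


def answer_with_fallback(query: str,
                         ai_call: Callable[[str], AIResult],
                         fallback: Callable[[str], str],
                         min_confidence: float = 0.7) -> tuple[str, str]:
    """Return (answer, source). Low confidence or an error never blocks the user."""
    try:
        result = ai_call(query)
    except Exception:
        return fallback(query), "fallback:error"
    if result.confidence < min_confidence:
        return fallback(query), "fallback:low_confidence"
    return result.answer, "ai"


# Stand-ins for a real model call and a deterministic fallback path.
def confident_ai(q: str) -> AIResult:
    return AIResult("Your order ships Friday.", 0.92)


def unsure_ai(q: str) -> AIResult:
    return AIResult("Maybe Tuesday?", 0.41)


def broken_ai(q: str) -> AIResult:
    raise TimeoutError("model timed out")


def handoff(q: str) -> str:
    return "Connecting you with a support agent."


for ai in (confident_ai, unsure_ai, broken_ai):
    print(answer_with_fallback("Where is my order?", ai, handoff))
```

Returning the source label alongside the answer also gives monitoring a cheap signal: a rising share of fallbacks is often the first visible symptom of a problem.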

6. Monitoring After Release

Release is not the finish line. Teams need ongoing performance tracking, along with alerts for drift, unexpected behavior, or gradual degradation.
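One simple form of drift alerting compares a rolling average of a quality score against the level measured at launch. This is a sketch, not a full monitoring system: `DriftMonitor`, the window size, and the tolerance are assumptions, and the scores could come from automated evals or user ratings.

```python
from collections import deque
from statistics import mean


class DriftMonitor:
    """Alert when a rolling quality score drops too far below its baseline."""

    def __init__(self, baseline: float, window: int = 100, tolerance: float = 0.05):
        self.baseline = baseline        # quality measured at launch
        self.tolerance = tolerance      # how far the rolling mean may fall
        self.scores = deque(maxlen=window)

    def record(self, score: float) -> bool:
        """Add one score (e.g. an eval result or user rating); return True to alert."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # wait for a full window before judging
        return mean(self.scores) < self.baseline - self.tolerance


monitor = DriftMonitor(baseline=0.90, window=10, tolerance=0.05)
alerts = [monitor.record(s) for s in [0.9] * 10 + [0.7] * 10]
print(alerts.index(True))  # 12: the third low score pulls the mean to 0.84
```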

7. Data and Privacy Safeguards

Make sure sensitive data is stored securely, logging is governed by clear controls, and access is restricted. These guardrails protect users as well as the business.
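As one example of log controls, the sketch redacts email addresses and phone numbers before a log record is written, using a standard `logging.Filter` subclass. The regex patterns are simplified assumptions and will miss many formats; treat them as placeholders for a proper PII-detection step.

```python
import logging
import re

# Simplified patterns for illustration only; real PII detection needs more.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    return PHONE.sub("[PHONE]", EMAIL.sub("[EMAIL]", text))


class RedactingFilter(logging.Filter):
    """Logging filter that redacts each record before the handler writes it."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = redact(record.getMessage())  # merge args first, then redact
        record.args = None                         # args are already merged in
        return True                                # keep the (redacted) record


logger = logging.getLogger("ai_interactions")
handler = logging.StreamHandler()
handler.addFilter(RedactingFilter())
logger.addHandler(handler)
logger.warning("User %s asked about order 42", "jane.doe@example.com")
# logs: User [EMAIL] asked about order 42
```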

8. Clear Accountability

Someone must clearly own model behavior, quality checks, and decision-making. Strong AI-native product teams treat evaluation and governance as part of their daily routine, not as a one-time checklist.

How AI-Native Teams Evolve as They Scale

As an AI-native team grows, the main thing that changes is not its size but its clarity. Small teams rely on shared understanding and informal decision-making; scaling requires clearer controls, repeatable processes, and common standards. The few generalists who once handled everything gradually give way to a more structured team with clearer accountability for data, model behavior, and product impact.

Over time, high-performing teams move beyond experimentation to discipline. Evaluation methods become more sophisticated, workflows more consistent, and knowledge better documented so newcomers can ramp up faster. This transition is what lets an AI-native organization scale AI across products while maintaining pace, quality, and trust in its production systems.

The Anti-Patterns That Kill AI Teams

Even strong teams can fail if they fall into these common traps. Avoiding these anti-patterns early helps AI-native product teams deliver consistent, scalable results.

- Treating AI as a Side Feature: Bolting AI onto a product without rethinking the workflow slows adoption and limits impact.

- Unclear Ownership: Without clear owners for AI features, errors slip through and accountability erodes. Teams should know exactly who owns model behavior and outcomes.

- Over-Centralization: Routing every decision and approval through a single bottleneck costs the team the speed and agility it needs to experiment safely.

- Tool Dependency: Relying too heavily on an external partner or a pre-built tool without a knowledge-transfer process can gradually create long-term dependency.

- Ignoring Feedback Loops: AI systems deliver good results only with constant monitoring, evaluation, and iteration. Skip these, and models degrade while user trust erodes.

Final Words

Building an AI-native product engineering team is a necessity for modern organizations that want to stay competitive. It is not easy: it requires a clear plan, the right combination of skills, and a culture that treats AI as a partner rather than just a tool. Successful companies focus on what is measurable, collaborate across functions, and keep iterating to get the most out of AI in product development.

Moving from conventional engineering to AI-native workflows requires rethinking the organization, roles, and processes so they combine human judgment with AI-driven efficiency. Organizations that embed AI early, give people room to experiment, and prioritize business metrics can achieve faster development cycles, better decision-making, and more innovative products.

At SoluteLabs, we help companies strengthen their product development capabilities as a trusted AI development partner. From building AI-native teams to integrating AI into workflows, we provide the skills and support needed to accelerate growth and innovation. Reach out to learn how we can help your organization build the AI-enabled products of tomorrow.