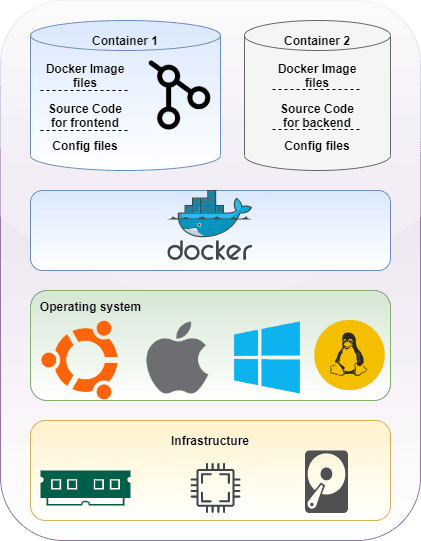

What is a container?

If you are an application developer or a system administrator today, you have probably come across the term "containers." A container is a unit that bundles your code together with its dependencies so that it can run in an isolated environment. Unlike virtual machines, which virtualize hardware resources to run multiple operating systems, containers virtualize the operating system to run multiple workloads on a single OS.

Just as a single VM instance is rarely enough to host an application in production, a single container is rarely enough either: you need to manage many container instances to meet your microservice needs and keep your application running. Managing, monitoring, and scaling hundreds of containers is stressful and can quickly become a disaster if it is not done right. To manage your application well and provide 24x7 availability, you need to think beyond just running your code in containers.

This is exactly where container orchestration tools like Kubernetes and Mesos fit in!

What is a container orchestrator and why do you need it?

· A container orchestrator is a tool that monitors and manages the complete lifecycle of your containers, from bringing them into the running state to gracefully terminating your code and shutting the containers down

· These tools probe each container's health and keep a check on the application code running inside it

· Under higher load, these tools can efficiently scale out your application, from serving 100 clients to millions of clients, without any downtime

While Docker has long been the undefeated platform for running containers, various orchestration tools are available on the market to cater to different needs, depending on your application's current workflow and future requirements. Today we take a look at two of the world's most widely adopted and versatile orchestration tools, Kubernetes and Mesos, and at which one best suits your application's needs and future demands.

Overview of Kubernetes

Kubernetes is an open-source container orchestration tool originally developed by Google and currently maintained by the Cloud Native Computing Foundation (CNCF). Kubernetes provides container deployment, scaling, and management services, along with an exposed core API that lets developers interact directly with the Kubernetes control plane to create, configure, and manage Kubernetes clusters and to integrate their own systems with it seamlessly.

Overview of Mesos

Mesos is an open-source cluster manager originally developed at UC Berkeley and now maintained by the Apache Software Foundation. It is designed to handle both containerized and non-containerized workloads running on distributed systems. Marathon is the Mesos framework that runs containerized applications on a Mesos cluster.

Kubernetes vs. Mesos

After a brief look at the Kubernetes and Mesos architecture diagram and some of their features, let's now compare them in detail and choose the right one for your application workload.

1. Architecture

A Kubernetes cluster consists of two types of nodes: master (control plane) nodes and worker (slave) nodes. Each node runs a specific set of services so that, together, they form a cluster and provide a container orchestration platform.

Master nodes: these nodes maintain the state of the cluster and control all the nodes in it. The cluster state is stored in etcd, a key-value store that can run on the master nodes themselves or be maintained separately on different nodes. Master nodes run three major processes: kube-apiserver, kube-scheduler, and kube-controller-manager.

Worker (slave) nodes: these nodes run your workload in a containerized environment with the help of three major services: kubelet, kube-proxy, and a container runtime (such as containerd, CRI-O, or Docker).

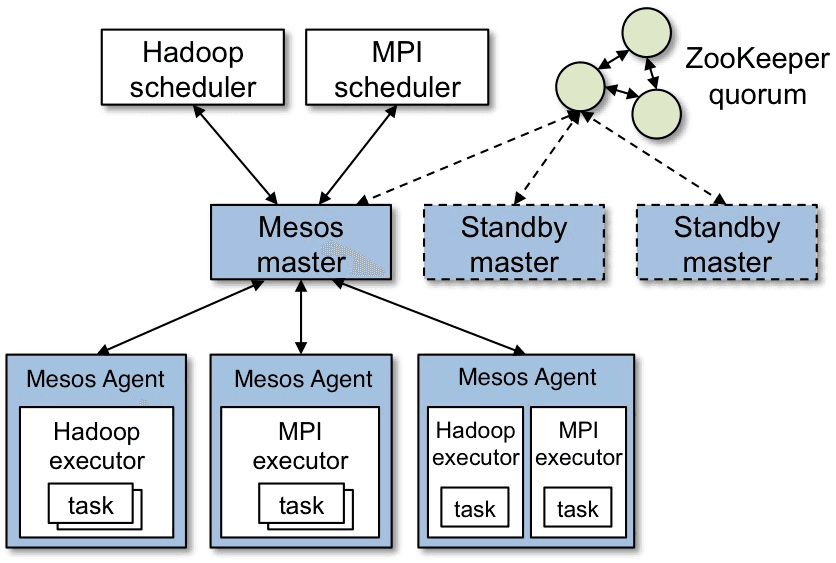
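The kube-controller-manager mentioned above runs control loops that keep comparing the desired state (stored in etcd and served by kube-apiserver) with what is actually running, and act to close the gap. Below is a minimal sketch of that reconciliation idea; the plain dictionaries and the `reconcile`/`apply_actions` helpers are hypothetical stand-ins, not the real Kubernetes API.

```python
from __future__ import annotations


def reconcile(desired: dict[str, int], actual: dict[str, int]) -> list[tuple[str, str, int]]:
    """Compare desired vs. actual replica counts and return corrective actions.

    Hypothetical helper: a real controller reads and writes objects through
    kube-apiserver instead of plain dictionaries.
    """
    actions = []
    for app in sorted(desired.keys() | actual.keys()):
        want = desired.get(app, 0)
        have = actual.get(app, 0)
        if have < want:
            actions.append(("create", app, want - have))  # too few pods: start more
        elif have > want:
            actions.append(("delete", app, have - want))  # too many pods: stop some
    return actions


def apply_actions(actions: list[tuple[str, str, int]], actual: dict[str, int]) -> None:
    """Pretend the scheduler and kubelets carried out the actions."""
    for verb, app, count in actions:
        actual[app] = actual.get(app, 0) + (count if verb == "create" else -count)


# A worker node failed and took two of the three nginx pods with it.
desired = {"nginx": 3}
actual = {"nginx": 1, "old-job": 2}
actions = reconcile(desired, actual)
print(actions)  # [('create', 'nginx', 2), ('delete', 'old-job', 2)]
apply_actions(actions, actual)
print(reconcile(desired, actual))  # []
```

Running this loop over and over is what makes the system self-healing: after a node failure, the next pass simply sees fewer pods than desired and creates replacements.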

A Mesos cluster consists of master nodes and slave nodes. The active master is elected from a quorum managed by ZooKeeper, and the slave nodes run the Mesos agent, which executes the application workloads.

Mesos agent nodes advertise their available resources to the master. The master offers these resources to the frameworks' schedulers and, based on the tasks those schedulers register, assigns the workload to the slave nodes, where it is run by Mesos executors.
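This is Mesos's two-level scheduling: the master decides which framework receives an offer of an agent's resources, and the framework's own scheduler decides which tasks to launch with it. A minimal sketch of the framework side, assuming a simple first-fit policy; the `Offer`, `Task`, and `schedule` names are hypothetical and are not the Mesos API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Offer:
    agent: str
    cpus: float
    mem_mb: int


@dataclass
class Task:
    name: str
    cpus: float
    mem_mb: int


def schedule(offers: list[Offer], pending: list[Task]):
    """Hypothetical framework scheduler: first-fit placement of tasks into offers.

    Returns (launches, unplaced): launches pairs each task name with the agent
    whose offer it was placed in; unplaced tasks wait for future offers.
    """
    launches = []
    queue = list(pending)
    for offer in offers:
        cpus, mem = offer.cpus, offer.mem_mb
        still_waiting = []
        for task in queue:
            if task.cpus <= cpus and task.mem_mb <= mem:
                launches.append((task.name, offer.agent))
                cpus -= task.cpus  # consume part of this offer
                mem -= task.mem_mb
            else:
                still_waiting.append(task)
        queue = still_waiting
        # Whatever remains of this offer would be declined and returned to the master.
    return launches, queue


offers = [Offer("agent-1", 2.0, 1024), Offer("agent-2", 1.0, 512)]
tasks = [Task("web-1", 1.0, 512), Task("web-2", 1.0, 512), Task("db", 2.0, 2048)]
launches, waiting = schedule(offers, tasks)
print(launches)                   # [('web-1', 'agent-1'), ('web-2', 'agent-1')]
print([t.name for t in waiting])  # ['db']
```

Because placement logic lives in the framework rather than the master, different frameworks (Marathon for services, others for batch or big data jobs) can apply their own policies to the same pool of cluster resources.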

2. Workloads

- As mentioned earlier, Kubernetes supports containerized workloads and is most popularly used with Docker containers. Besides Docker, Kubernetes also supports container runtimes such as containerd and CRI-O.

- In contrast to Kubernetes, Mesos supports diverse workloads, both containerized and non-containerized; containerized workloads are supported in Mesos through an additional framework known as Marathon.

3. High Availability

- Kubernetes supports configurations with multiple master and multiple worker nodes, which provides high availability for running workloads. Pods in Kubernetes are also distributed across a cluster of worker nodes, so a node failure is handled by moving the affected workloads to another node.

- Like Kubernetes, Mesos can have multiple masters and slaves, with the active master elected by a ZooKeeper quorum. Mesos also provides high availability by running multiple Mesos agents for the distributed workload in a cluster.
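Both ZooKeeper (used by Mesos for master election) and etcd (which stores the Kubernetes cluster state) rely on majority quorums, so the number of coordination nodes determines how many failures the cluster can survive. The arithmetic as a small sketch:

```python
def quorum_size(ensemble_size: int) -> int:
    """Smallest strict majority of the ensemble: floor(n / 2) + 1."""
    if ensemble_size < 1:
        raise ValueError("an ensemble needs at least one member")
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size: int) -> int:
    """How many members can fail while a majority can still be formed."""
    return ensemble_size - quorum_size(ensemble_size)


for n in (1, 3, 4, 5):
    print(f"{n} members: quorum {quorum_size(n)}, survives {tolerated_failures(n)} failure(s)")
```

A 4-member ensemble tolerates no more failures than a 3-member one, which is why odd sizes such as 3 or 5 are the usual choice for highly available masters.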

4. Scaling

- In Kubernetes, scaling is managed by an object called the Horizontal Pod Autoscaler (HPA). In the following kubectl get hpa output, the HPA monitors the nginx pods and can scale them out automatically when their average utilization rises above the set target, which is 80% in our use case.
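The HPA's core decision follows the proportional rule in the Kubernetes documentation: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no scaling when the ratio is within a tolerance (0.1 by default). A simplified sketch that also clamps the result to the min/max pod counts; it deliberately ignores stabilization windows, missing metrics, and unready pods, which the real controller takes into account.

```python
import math


def desired_replicas(current_replicas: int, current_util: float, target_util: float,
                     min_replicas: int, max_replicas: int, tolerance: float = 0.1) -> int:
    """Simplified HPA rule: ceil(current * current_util / target_util), clamped to [min, max]."""
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: leave the scale alone
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(1, 10, 80, 1, 10))   # 1: 10% against an 80% target keeps one pod
print(desired_replicas(4, 200, 80, 1, 10))  # 10: 4 * 2.5 = 10 replicas
print(desired_replicas(4, 400, 80, 1, 10))  # 10: 20 wanted, capped at the maximum
```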

```text
NAME    REFERENCE          TARGETS   MINPODS   MAXPODS   REPLICAS
nginx   Deployment/nginx   10%/80%   1         10        1
```

- In Mesos, the same is achieved by a scheduling framework such as Marathon, which can change the scale of an application through its UI or through the application definition:

```json
{
  "id": "/my-app",
  ...
  "instances": 10,
  ...
}
```

5. Failover

Both Kubernetes and Mesos manage node, process, and workload failover.

- Kubernetes manages application failover by running readiness and liveness probes, and it can automatically restart or redeploy workloads whenever required. Leader election for the control plane is also self-managed in Kubernetes.

The following example configures liveness and readiness probes in a pod to monitor the health of an application:

```yaml
...
livenessProbe:
  failureThreshold: 3
  httpGet:
    path: /health
    port: 8080
    scheme: HTTP
  initialDelaySeconds: 15
  periodSeconds: 30
  successThreshold: 1
  timeoutSeconds: 1
...
readinessProbe:
  failureThreshold: 3
  httpGet:
    path: /health
    port: 8080
    scheme: HTTP
  initialDelaySeconds: 15
  periodSeconds: 30
  successThreshold: 1
  timeoutSeconds: 1
...
```

- Likewise, in Mesos, master failover is managed by the ZooKeeper quorum, while application failover is monitored by the master nodes, which track the cluster state from the data published by the Mesos agents running on the worker nodes.
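Back on the Kubernetes side, the probe settings above work as counters over consecutive results: with periodSeconds: 30 and failureThreshold: 3, a container that stops answering /health is flagged after three consecutive failed probes, roughly 60 to 90 seconds after it breaks (plus probe timeouts). A toy model of that counting, not kubelet code; the `ProbeTracker` class is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProbeTracker:
    """Toy model of how failureThreshold and successThreshold turn probe results into a verdict.

    Starts healthy, like a liveness probe. A failed liveness verdict means the
    kubelet restarts the container; a failed readiness verdict removes the pod
    from Service endpoints.
    """
    failure_threshold: int = 3
    success_threshold: int = 1
    healthy: bool = True
    consecutive_failures: int = 0
    consecutive_successes: int = 0

    def record(self, probe_ok: bool) -> bool:
        if probe_ok:
            self.consecutive_failures = 0
            self.consecutive_successes += 1
            if not self.healthy and self.consecutive_successes >= self.success_threshold:
                self.healthy = True
        else:
            self.consecutive_successes = 0
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.failure_threshold:
                self.healthy = False
        return self.healthy


tracker = ProbeTracker(failure_threshold=3, success_threshold=1)
verdicts = [tracker.record(ok) for ok in [True, False, False, True, False, False, False, True]]
print(verdicts)  # [True, True, True, True, True, True, False, True]
```

Two isolated failures never trip the threshold because a success in between resets the counter; only the third failure in a row changes the verdict.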

6. Upgrades and Rollbacks

- Kubernetes supports upgrading application pods through Deployment objects, where the deployment strategy and scale can be specified in YAML files for uninterrupted, zero-downtime deployments. Rolling back an upgrade in Kubernetes is done with the kubectl rollout undo command, which requires no editing of YAML files.

```shell
kubectl rollout history deployment/nodejs-app
kubectl rollout undo deployment/nodejs-app --to-revision=99
```

- Mesos also supports application upgrades through a JSON deployment file, where zero downtime is achieved using its rolling restart feature. Unlike Kubernetes, rolling back in Mesos requires editing the deployment manually.

- Marathon adds the property minimumHealthCapacity to aid deployments: when it is set to 0, all old instances are killed before the new version is started, whereas setting it to 1 starts all new instances before the old ones are removed.
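On the Kubernetes side, a Deployment's RollingUpdate strategy bounds an upgrade with two settings, maxSurge and maxUnavailable (25% each by default), which play a role comparable to Marathon's minimumHealthCapacity. When given as percentages, the Kubernetes documentation rounds maxSurge up and maxUnavailable down. A small sketch of that arithmetic:

```python
from __future__ import annotations


def rolling_update_bounds(replicas: int, max_surge_pct: int = 25,
                          max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (minimum available pods, maximum total pods) during a RollingUpdate.

    maxSurge percentages round up, maxUnavailable percentages round down.
    """
    max_surge = -(-replicas * max_surge_pct // 100)          # ceiling division
    max_unavailable = replicas * max_unavailable_pct // 100  # floor division
    return replicas - max_unavailable, replicas + max_surge


print(rolling_update_bounds(10))  # (8, 13)
print(rolling_update_bounds(1))   # (1, 2)
```

With 10 replicas and the defaults, at least 8 pods stay available while at most 13 exist at once; for a single replica the rounding guarantees the old pod stays up until its replacement is ready.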

7. Persisting Data

- Kubernetes provides data persistence for its containers through multiple storage solutions. As seen in the architecture diagram, Kubernetes provides an object called a PersistentVolume, along with PersistentVolumeClaims (PVCs), which can be attached to a particular pod and thereby keep data independent of the application's runtime. Storage solutions that can be integrated with PVCs include Ceph, NFS mount points, AWS storage services such as EBS, and many more.

The following example stores a database's data in a persistent volume mounted into a pod; important config files, certificates, and data are all mounted using volumes:

```yaml
...
volumeMounts:
  - mountPath: /usr/share/elasticsearch/data
    name: elasticsearch-data
  - mountPath: /usr/share/elasticsearch/config/certs
    name: elastic-certificates
  - mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
    name: esconfig
    subPath: elasticsearch.yml
...
volumes:
  - name: elasticsearch-data
    persistentVolumeClaim:
      claimName: efk-data-efk-data-0
  - name: elastic-certificates
    secret:
      secretName: elastic-certificates
  - configMap:
      name: efk-data-config
    name: esconfig
...
```

- Mesos, on the other hand, manages data persistence by accessing the local volumes on the physical storage of the agent where the application is running, which makes managing data across a cluster cumbersome.

```json
{
  "container_path": "/var/lib/elasticsearch",
  "mode": "RW",
  "source": {
    "type": "HOST_PATH",
    "host_path": {
      "path": "/usr/src/elasticsearch"
    }
  }
}
```

8. Networking

- Networking in Kubernetes follows a model in which every pod gets its own unique IP address, so all pods and containers in a cluster can communicate with each other directly. This is possible because Kubernetes creates two network layers, one for pods and one for services. It also strengthens security, since these networks are unreachable from outside the cluster by default, while still allowing full communication between all the application containers.

The following YAML definition maps an application's port to a Service port in Kubernetes:

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    release: logstash
  name: logstash
spec:
  ports:
    - name: beats
      port: 5044
      protocol: TCP
      targetPort: 5044
  selector:
    release: logstash
  type: ClusterIP
```

- Mesos implements networking by mapping container ports to host ports, which are a limited resource; this is one of the major drawbacks of its architecture. Because of this setup, even containers in the same cluster cannot easily communicate with each other directly and have to go through the mapped host ports.
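In the Kubernetes Service defined above, the selector decides which pods receive traffic sent to port 5044 on the Service's cluster IP. A simplified model of that matching; the pod dictionaries, IPs, and helper names are hypothetical, and in a real cluster the endpoints controller and kube-proxy do this work.

```python
from __future__ import annotations


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based selector: every key/value pair must be present in the pod's labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def service_endpoints(selector: dict[str, str], target_port: int,
                      pods: list[dict]) -> list[tuple[str, int]]:
    """Return the (pod IP, targetPort) pairs a Service would route traffic to.

    Only pods whose labels match the selector and that are ready are included.
    """
    return [(pod["ip"], target_port) for pod in pods
            if pod["ready"] and matches(selector, pod["labels"])]


# Hypothetical pods; names and IPs are made up for illustration.
pods = [
    {"name": "logstash-0", "ip": "10.244.1.5", "ready": True, "labels": {"release": "logstash"}},
    {"name": "logstash-1", "ip": "10.244.2.7", "ready": False, "labels": {"release": "logstash"}},
    {"name": "kibana-0", "ip": "10.244.1.9", "ready": True, "labels": {"release": "kibana"}},
]
print(service_endpoints({"release": "logstash"}, 5044, pods))  # [('10.244.1.5', 5044)]
```

Because each pod has its own IP, every matching pod can listen on the same targetPort 5044 without any host-port conflicts, which is the contrast with the host-port mapping described for Mesos.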

Using Kubernetes and Mesos on Azure

- Kubernetes on Azure offers a serverless Kubernetes experience, with a wide variety of plugins available for setting up CI/CD and securely exposing your applications over the internet.

- Mesos on Azure is offered as the Mesosphere DC/OS service, which provides complete cluster management services, including a web UI, for controlling over 10,000 nodes in a cluster.

- The primary competitor to these services on Azure is Azure Container Service, which provides a lightweight runtime environment for containers and offers scaling and management functionality using Mesos and Docker Swarm.

Azure Container Service is an easy-to-set-up, lightweight container service, but it lacks features such as auto-scaling, self-healing, and networking. Using Kubernetes or Mesos on Azure therefore provides many advantages, which can be summed up as follows:

- Elastic distributed system, meaning these services are easy to scale and manage on demand

- A simplified web UI

- Fault-tolerant architecture, with availability zones that keep services running if they are affected by natural disasters

- Easy integration with plugins and services

- Built-in Continuous Integration and Continuous Delivery (CI/CD) tooling

- Enterprise-grade security and governance of services

Cons of using Kubernetes and Mesos on Azure:

- These services are not suitable for running on a long-term basis

Using Kubernetes and Mesos on AWS

On AWS, both Kubernetes and Mesos manage a cluster of Amazon EC2 instances that run the containers.

- Amazon Elastic Kubernetes Service (Amazon EKS) provides a flexible, one-click approach to deploying and running Kubernetes clusters on premises or in the Amazon cloud. EKS runs containers on EC2 instances, grouped into logical entities called pods that are managed by the control plane software.

- Apache Mesos on AWS provides a platform for running big data applications in the cloud, with containerized and non-containerized workloads managed together in a single cluster. It runs on Amazon EC2 instances and abstracts their compute resources to provide an easy-to-build, fault-tolerant, distributed environment for running applications efficiently.

- Amazon Elastic Container Service (ECS) is Amazon's own container orchestration solution; it is well integrated with other Amazon cloud services and is a good alternative to Kubernetes. Because ECS provides more flexibility and seamless integration with Amazon Route 53, Secrets Manager, AWS Identity and Access Management (IAM), and Amazon CloudWatch, it often proves to be a better solution than EKS.

- Mesos acts as a cluster manager, whereas ECS acts as a container orchestrator in AWS. When it comes to managing thousands of nodes and hybrid big data workloads, Mesos is the clear winner, but for running container instances for a limited user base, ECS is the better option.

- In general, ECS provides a better pricing model and a wider variety of service integrations, which makes it a good alternative to EKS and Mesos on AWS.

When to use which?

Kubernetes can run containerized workloads on a single node or scale to a set of nodes forming a cluster, so it is best suited for organizations and start-ups moving from small-scale application deployments to cloud-native deployments. Kubernetes helps in the early stages of the application lifecycle because it is lightweight and easy to deploy, and it eases developers into cluster-oriented deployments. Backed by a large development community, Kubernetes offers a wider variety of plugins and integrations than its competition.

If you have an existing workload that needs to be integrated with the containerized application you are currently developing, Mesos lets you leverage its hybrid distributed-systems orchestration design by providing a framework that hosts all the workloads in a single cluster. Mesos is desirable when you need a stable platform with a large number of nodes, typically more than 20 in a cluster. However, Mesos has a steep learning curve, and developers need an in-depth understanding of it to harness the full potential of a Mesos cluster.

Conclusion

In conclusion, Kubernetes and Mesos are completely different frameworks that both enable the orchestration of Docker containers, and they are similar in providing scalability, portability, and isolated workspaces for running your workloads.

Kubernetes has turned out to be a fan favorite: it provides an easy-to-use architecture with many benefits for beginners in cloud development. Mesos, on the other hand, is a robust, heavyweight framework best suited for organizations that need to migrate existing applications and integrate them with a containerized environment.